ElevenLabs in 2026: From Personal Voice Studio to Expressive AI Voice

It’s been several months since I shared how ElevenLabs became my personal voice studio, the place where written words suddenly gain humanity and resonance through AI voices. That era wasn’t just about converting text into audio. It was about sound with soul.

But like most things in AI, time doesn’t just pass — it accelerates.

Today, ElevenLabs isn’t just a neat tool for narration and podcasts anymore. It’s positioning itself as one of the most expressive and emotionally intelligent text-to-speech engines available, thanks to a major update called Eleven v3 and a host of strategic developments that mark its evolution.

Eleven v3: More Than Just a Voice Tuner

The latest version of ElevenLabs’ core text-to-speech model — Eleven v3 — is now generally available, and it’s very different from what came before. Whereas earlier versions emphasized naturalness and clarity, v3 shifts the goalposts toward expressiveness, emotional nuance, and performance-level delivery in generated speech.

Here’s what makes it stand out:

1. Emotional Control with Audio Tags

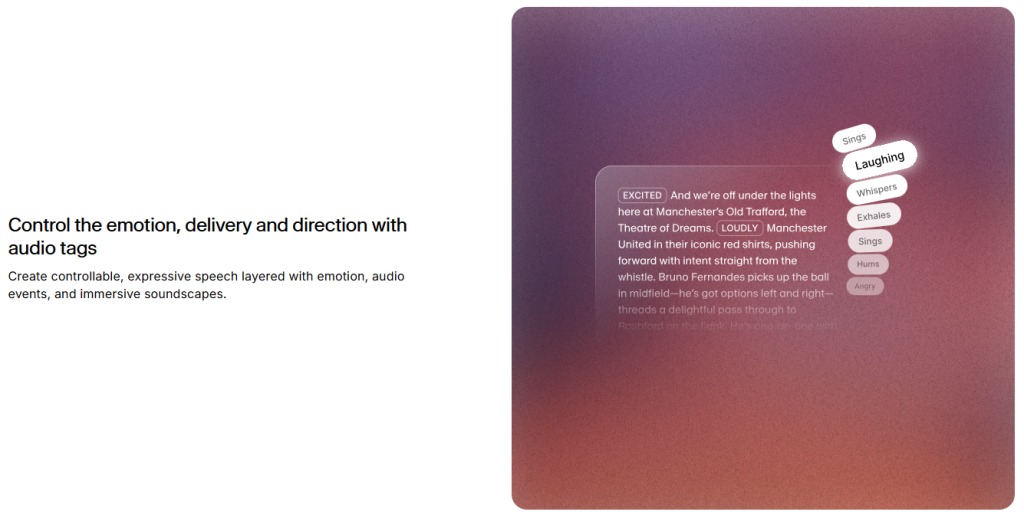

Eleven v3 introduces the concept of inline audio tags — simple annotations like [excited], [whispers], or [sighs] embedded directly in your script to guide how lines are spoken, not just what is spoken. This gives creators director-level control over voice delivery, making the output feel more like an acted performance than a machine reading.
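
To make the idea concrete, here is a minimal Python sketch that writes a tagged script and packages it as a request. It assumes ElevenLabs’ REST text-to-speech endpoint (`POST /v1/text-to-speech/{voice_id}`), the `xi-api-key` header, and the `eleven_v3` model ID as documented at the time of writing; treat all three as assumptions and check the current API reference. The voice ID and API key are hypothetical placeholders, and the function only builds the request without sending it.

```python
import json

# Assumed API base URL and model ID; verify against the current ElevenLabs docs.
API_BASE = "https://api.elevenlabs.io/v1"
V3_MODEL_ID = "eleven_v3"


def tag(cue: str, text: str) -> str:
    """Prefix a line with an inline audio tag such as [whispers]."""
    return f"[{cue}] {text}"


def build_tts_request(voice_id: str, lines: list[str], api_key: str) -> tuple[str, dict, str]:
    """Return (url, headers, json_body) for one v3 generation; nothing is sent."""
    url = f"{API_BASE}/text-to-speech/{voice_id}"
    headers = {"xi-api-key": api_key, "Content-Type": "application/json"}
    # The tags stay inline in the text; the model reads them as delivery cues.
    body = json.dumps({"text": "\n".join(lines), "model_id": V3_MODEL_ID})
    return url, headers, body


script = [
    tag("excited", "We finally found the lighthouse!"),
    tag("whispers", "But something inside is still awake."),
    tag("sighs", "Of course it is."),
]

# "VOICE_ID" and "YOUR_API_KEY" are hypothetical placeholders.
url, headers, body = build_tts_request("VOICE_ID", script, "YOUR_API_KEY")
print(url)
print(body)
```

Sending it is an ordinary HTTPS POST with any HTTP client; keeping construction separate from transport makes the tagged script easy to inspect or log before you generate anything.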

2. Dialogue Mode & Multi-Speaker Support

Long gone are the days of generating isolated lines and stitching them together by hand. Eleven v3 supports Text to Dialogue, which lets you define multiple speakers and generate a naturally flowing conversation in a single pass, complete with pacing and interruptions.
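
A multi-speaker script boils down to an ordered list of turns, each mapped to a voice. The sketch below assumes the Text to Dialogue endpoint is `POST /v1/text-to-dialogue` and accepts an `inputs` list of `{"text", "voice_id"}` objects plus a `model_id`, as described in ElevenLabs’ API reference at the time of writing; confirm the exact shape before using it. The speaker names and voice IDs are hypothetical, and, as before, the code only assembles the payload.

```python
import json
from dataclasses import dataclass

# Assumed endpoint and model ID; confirm in the current ElevenLabs API reference.
DIALOGUE_URL = "https://api.elevenlabs.io/v1/text-to-dialogue"
V3_MODEL_ID = "eleven_v3"


@dataclass
class Turn:
    speaker: str  # a character name used only inside this script
    text: str     # the spoken line, optionally carrying inline audio tags


def build_dialogue_body(turns: list[Turn], voices: dict[str, str]) -> str:
    """Map each turn's speaker to a voice ID and serialize one dialogue request."""
    missing = {t.speaker for t in turns} - set(voices)
    if missing:
        raise KeyError(f"no voice assigned for: {sorted(missing)}")
    # Turn order is preserved, so the conversation plays back as written.
    inputs = [{"text": t.text, "voice_id": voices[t.speaker]} for t in turns]
    return json.dumps({"inputs": inputs, "model_id": V3_MODEL_ID})


# Hypothetical voice IDs for two characters.
voices = {"Ada": "voice_ada_123", "Ben": "voice_ben_456"}
turns = [
    Turn("Ada", "[excited] Did you hear that?"),
    Turn("Ben", "Hear what? I was just..."),
    Turn("Ada", "[whispers] Shh. Listen."),
]
body = build_dialogue_body(turns, voices)
print(body)
```

Failing fast on an unassigned speaker catches a typo in a character name before a whole scene is generated with a missing voice.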

3. Expanded Language Support

Eleven v3 supports 70+ languages, broader coverage than the earlier multilingual models, so global content creators can generate expressive speech for more contexts, dialects, and audiences than ever before.

4. Better Nuance and Expressiveness

Eleven v3 doesn’t just read text; it interprets it. Its deeper grasp of stress patterns, cadence, and emotional subtext brings life and variation to otherwise ordinary text, which is critical for storytelling, audiobooks, and character-driven content.

What This Means for Everyday Creators

If you’ve used ElevenLabs before, you’ll notice a qualitative shift with v3:

- Voiceovers sound more alive

- Characters in dialogue feel more distinct

- Narration has emotion and rhythm

- Projects with multiple speakers are easier to produce

In short, ElevenLabs has moved beyond great TTS into expressive AI voice acting, and that’s a different creative space entirely.

But there is a trade-off: v3 currently demands slightly more sophisticated prompting than earlier models if you want to unlock its full potential. For real-time conversation use cases (like live agents or voice chatbots), ElevenLabs still recommends its v2.5 Turbo or Flash models for now.
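
That trade-off can be captured in a small routing helper. This is a hypothetical sketch: the model ID strings (`eleven_v3`, `eleven_flash_v2_5`, `eleven_turbo_v2_5`) follow ElevenLabs’ published naming at the time of writing and should be checked, and the rule itself (latency-sensitive work goes to Flash or Turbo v2.5, everything else to v3) simply encodes the recommendation above.

```python
from enum import Enum


class UseCase(Enum):
    NARRATION = "narration"    # audiobooks, voiceovers, podcasts
    DIALOGUE = "dialogue"      # pre-rendered multi-speaker scenes
    LIVE_AGENT = "live_agent"  # real-time voice agents and chatbots


# Assumed model IDs; verify against the current model list in the ElevenLabs docs.
EXPRESSIVE_MODEL = "eleven_v3"
LOW_LATENCY_MODELS = {"flash": "eleven_flash_v2_5", "turbo": "eleven_turbo_v2_5"}


def pick_model(use_case: UseCase, prefer: str = "flash") -> str:
    """Route real-time work to a v2.5 model and offline, expressive work to v3."""
    if use_case is UseCase.LIVE_AGENT:
        return LOW_LATENCY_MODELS[prefer]
    return EXPRESSIVE_MODEL


for case in UseCase:
    print(case.value, "->", pick_model(case))
```

Keeping the choice in one function means only one place needs to change if the recommendation does.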

It’s Not Just Tech — It’s Ambition

Beyond the technical leaps, there’s also clear momentum:

- ElevenLabs recently secured a massive funding round, tripling its valuation to around $11 billion, signaling investor confidence in its vision.

- It’s continuing to expand its creative ecosystem beyond speech, including AI-generated music projects, partnerships with artists like Liza Minnelli and Art Garfunkel, and collaborations with creators like Matthew McConaughey.

This isn’t about one tool.

It’s about positioning voice AI as a core creative medium, shaping not just narration but the emotional fabric of modern storytelling.

If you’ve ever wondered how expressive an AI voice can get, this video answers that question.

Will ElevenLabs Replace Voice Actors?

Let’s be clear: no TTS model is set to fully replace professional voice talent any time soon — particularly for nuanced character work. But what v3 does is give creators tools to get closer to real emotional delivery than ever before.

That means more expressive audiobooks, more engaging tutorials, and more natural dialogue in interactive media — without the overhead of recording studios and contracts.

Final Thought

Looking back at where we were when I first wrote about ElevenLabs as my personal voice studio, it’s remarkable how far the technology has come — not just in quality, but in creative scope. The shift isn’t merely technical; it’s cultural. AI is no longer just reading text — it’s inhabiting it.

And as expressive models like Eleven v3 roll out, the question for creators becomes less about whether the tech is good enough and more about what stories you want to tell with it.